CAT Modelling in the Cloud Era

These days more and more of our digital lives are migrating to the cloud. We can get our music and entertainment from an app store, share files through Dropbox and collaboratively edit documents through Google Docs. The same trend is happening in the business world, with companies using cloud-hosted solutions in a number of different flavours, be it Software as a Service (SaaS), Platform as a Service (PaaS) or Infrastructure as a Service (IaaS).

This wave is now about to break over the remote coastline of the CAT modelling industry. Conflicting forces are driving this wave. On the one hand we have the rising computational requirements of today’s increasingly complex and detailed modelling. On the other, regulators, APRA included, are demanding a deeper understanding of model assumptions and results, and this, in turn, drives a need for more frequent and faster model runs.

Cloud-based CAT modelling solutions are beginning to emerge to satisfy this need. AIR Worldwide is promoting its new Touchstone platform as one that can be, at a company’s discretion, either cloud or locally based. A new initiative from the London-based start-up Oasis is also creating software that may be locally or cloud hosted. The primary objective of Oasis is to create an open source framework, and an associated community, that makes it much easier to develop and share models. Adopters are free to deploy the framework as they see fit.

The most far-reaching and ambitious initiative is that of market leader RMS’ cloud-only RMS(one) platform (www.rms.com). Perhaps even more significantly, RMS has decided that their platform, although proprietary, will also be able to host models from elsewhere, be they developed in-house by clients or by third parties like Risk Frontiers. The goal is to allow users to develop a view of risk that incorporates more perspectives than just those of RMS.

If a company would like to employ Risk Frontiers’ models on such a platform, it is simply a matter of enabling the models for that company, in much the same way apps are made instantly available from a smartphone’s app store.

Risk Frontiers has decided to make our initial foray into the CAT modelling cloud space by releasing our current suite of five Australian loss models (Tropical Cyclone, Earthquake, Hail, Bushfire and Flood) on RMS(one). In what follows we take a deeper dive into the RMS platform and how our integration efforts are proceeding.

Since RMS(one) is a cloud-only platform, let’s consider some of the consequences of moving CAT modelling to the cloud. Obviously it means exposures and analysis results are now stored in the cloud. There is some cost and effort involved in setting that process up, and then some inertia should you consider moving to another platform. There may also be company policy hurdles governing where data may be physically stored. If exposure data is detailed enough to be personally identifiable, some jurisdictions have laws governing how and where such data is stored.

Since data is now housed externally, we also have the usual concerns when moving to the cloud: we need to make sure we are comfortable with the level of security provided both for authentication – knowing who the user is and that they are who they say they are – and authorisation – allowing different levels of access for different users. We also need to be happy that the system will always be available when we need it.

RMS is taking this triplet of concerns – privacy, security and availability – very seriously indeed, given the reputational risk it assumes in housing client data. It has in fact decided that publicly available cloud infrastructure cannot address these concerns to a sufficient level and has instead decided to build its own data centres from scratch – a private cloud, in other words.

The data centres are located in physically hardened tier three facilities meaning all systems have redundancy so there is no single point of failure, and the facility downtime is guaranteed to be less than 1.6 hours per year.
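As a rough check on the downtime figure, an availability percentage can be converted into hours of downtime per year. The sketch below assumes the 99.982% availability commonly quoted for tier three facilities; that percentage is an assumption brought in for illustration and is not stated in this article, and the function name is hypothetical.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years


def annual_downtime_hours(availability: float) -> float:
    """Maximum hours of downtime per year implied by an availability fraction."""
    if not 0.0 <= availability <= 1.0:
        raise ValueError("availability must be a fraction between 0 and 1")
    return (1.0 - availability) * HOURS_PER_YEAR


# Assumed tier three availability of 99.982% (not a figure from this article).
tier_three_downtime = annual_downtime_hours(0.99982)
print(f"{tier_three_downtime:.2f} hours of downtime per year")
```

Under that assumption the result comes out just under the 1.6 hours per year quoted above.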

All data is encrypted both at rest, that is, when it is stored in the data centre, and in transit, as it travels down the wire.

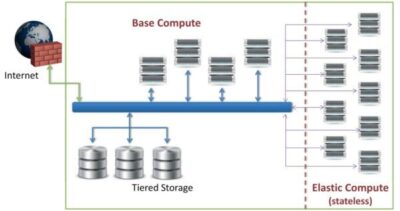

A graphical representation of the data centre (Figure 1) shows some features of the private cloud infrastructure that RMS has built.

Figure 1

The diagram also shows what happens during busy times, for example 1/1 or 1/7 renewals. RMS has modelled the compute requirements and concluded that, to accommodate possible peak demand, there should be a mechanism to draw extra compute capacity from the public cloud.

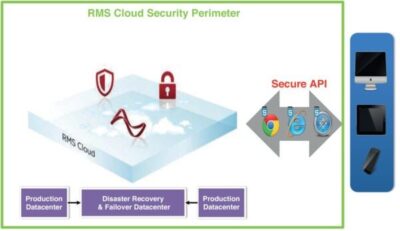

A cloud infrastructure also means the user experience can be extended to a very wide range of devices, be they tablets, smartphones or conventional desktops (see Figure 2).

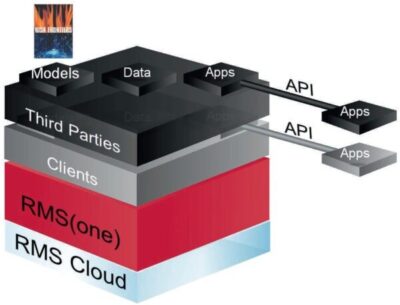

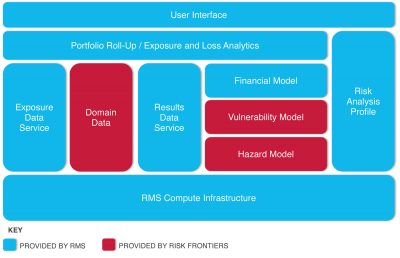

The complete RMS(one) technology stack looks something like that shown in Figure 3. At the base level we have the physical RMS private cloud infrastructure. Built on top of that is a system dubbed the analytics operating system (AoS), which takes care of core tasks: the essential systems for storing exposure data, running models and apps, and so on. Above that lie the APIs for the client environment that allow access to models, data and apps.

In a similar vein there are the APIs that allow third parties to provide models, data and apps on the platform. This is where Risk Frontiers comes in as the first third party model provider on RMS(one).

It is expected that the open nature of the APIs and the Model Development Kit (or MDK – a bundle of APIs, test projects and tools with a specific model development focus) will generate a diverse ecosystem surrounding the platform. Among the possibilities the APIs open up, end-users and third party developers alike will be able to:

- develop models and make them available to the industry at large – just as Risk Frontiers is doing;

- develop your own internal models available only to your company. In this respect, Munich Re announced last year that they will be transitioning all their internal models onto RMS(one);

- extend the User Interface by making custom ‘widgets’;

- use RMS widgets in your own apps – create mashups in other words; and

- deeply integrate your upstream and downstream in-house IT systems.

RMS is now very explicitly nurturing this emerging ecosystem, with an in-house team devoted to the endeavour. The goal is the kind of richness seen in, say, smartphone app stores, a richness that makes those platforms so compelling to buy into.

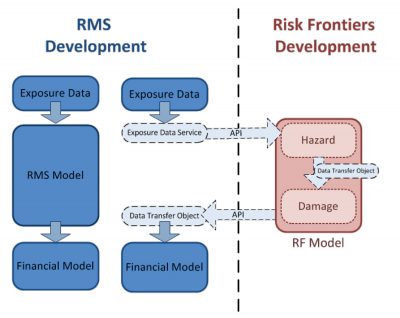

Figure 4 gives a view of the model development process, showing how independence is maintained technically. Risk Frontiers still designs, codes and maintains our models and data privately and independently of RMS; we only interact with the RMS(one) platform through publicly defined APIs. We obtain exposures from the platform through the Exposure Data Service APIs and provide ground-up losses back to the platform through a further set of APIs.

Figure 2: Accessing RMS(one) only occurs through secure APIs using HTML5. This allows access by a wide range of devices.

Figure 3: The RMS(one) technology stack.

Figure 4: Diagram showing how independence is maintained in the development process through the use of APIs and thus how IP remains within both companies’ respective silos.

The platform has also been designed with modularity in mind. Different third parties may elect to implement different kinds of modules depending on their particular strengths.

Figure 5: Modules implemented by Risk Frontiers to be able to present models on RMS(one). Other companies may choose to implement a different set of modules.

With a cloud based infrastructure, the sharing of views of risk becomes much easier. Once an analysis has been completed, because all data is already in the cloud, it is no longer necessary to bundle up multi-gigabyte packages of exposures and results. Sharing results with partners, clients, regulators and so on becomes as simple as sharing a link.

A CAT model’s raison d’être is to provide a synthetic catalogue of plausible events in sufficient number that when combined with a portfolio of exposures the user can derive exceedance loss statistics with reasonable confidence. Depending on the peril being modelled each catalogue will have quite different characteristics in the locations an event would impact, and how the events are distributed in time. It goes without saying that it is therefore important to understand the various model assumptions and what they cover – a point that APRA wishes to reinforce with their current regulatory changes.
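To make the idea of exceedance loss statistics concrete, here is a minimal sketch of how they might be derived from a simulated catalogue once each event has been assigned a loss against a portfolio. It works on annual aggregate losses and uses simple empirical ranking. The function names and the toy numbers are hypothetical and chosen for illustration only; they do not describe how Risk Frontiers’ models or RMS(one) calculate these figures.

```python
from collections import defaultdict


def annual_losses(event_losses, n_years):
    """Sum event losses by simulation year; years without events have zero loss."""
    totals = defaultdict(float)
    for year, loss in event_losses:
        totals[year] += loss
    return [totals.get(year, 0.0) for year in range(n_years)]


def exceedance_probability(losses, threshold):
    """Fraction of simulated years whose aggregate loss exceeds the threshold."""
    return sum(1 for loss in losses if loss > threshold) / len(losses)


def return_period_loss(losses, return_period):
    """Annual loss equalled or exceeded, on average, once every `return_period` years."""
    k = len(losses) // return_period  # number of years at or above that loss
    if k < 1:
        raise ValueError("catalogue too short for this return period")
    return sorted(losses, reverse=True)[k - 1]


# Toy catalogue: (simulation year, event loss) pairs over 10 simulated years.
toy_event_losses = [(0, 5.0), (2, 1.0), (2, 3.0), (5, 10.0), (7, 2.0)]
toy_annual = annual_losses(toy_event_losses, n_years=10)

p_exceed = exceedance_probability(toy_annual, threshold=3.0)
loss_1_in_5 = return_period_loss(toy_annual, return_period=5)
print(p_exceed, loss_1_in_5)
```

With only ten simulated years the estimates are very coarse, which is why a catalogue needs to contain a large number of events before the tail statistics can be read with reasonable confidence.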

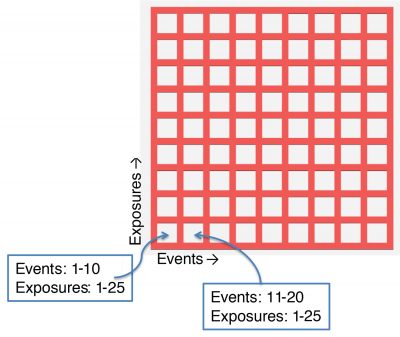

Risk Frontiers’ current crop of models generally have a catalogue spanning a simulation period of 50,000 years, which can mean hundreds of thousands or even millions of events in a catalogue. Each event covers many locations, and combining this with a portfolio of exposures that may extend to tens of thousands, hundreds of thousands or even more individual risks explains why this is a data- and computation-intensive exercise.

However, the problem can be divided into many smaller sub-problems and we can calculate each sub-problem in parallel: we can naturally partition a model analysis by the events in our catalogue and the exposures in our portfolio – this is shown in graphical form in Figure 6.

Figure 6: Graphical representation of dividing a CAT model analysis into smaller independent sub-analyses by partitioning the input exposures and the set of events that impact them.
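The partitioning described above can be sketched in a few lines. The example below splits a toy catalogue and a toy portfolio into chunks, computes the loss for every (event chunk, exposure chunk) pair as an independent task, and then sums the partial results per event. Everything here is a simplifying assumption for illustration: the data layout, the damage ratios and the function names are hypothetical, and a thread pool stands in for the many machines a cloud platform would use.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product


def chunks(items, size):
    """Split a list into consecutive chunks of at most `size` items."""
    return [items[i:i + size] for i in range(0, len(items), size)]


def partial_losses(event_chunk, exposure_chunk):
    """Loss per event for one independent (events, exposures) sub-analysis."""
    result = {}
    for event_id, damage_ratios in event_chunk:
        result[event_id] = sum(
            value * damage_ratios.get(location, 0.0)
            for location, value in exposure_chunk
        )
    return result


def run_analysis(events, exposures, event_chunk_size, exposure_chunk_size):
    """Run every sub-analysis concurrently and merge the losses per event."""
    tasks = list(product(chunks(events, event_chunk_size),
                         chunks(exposures, exposure_chunk_size)))
    totals = {event_id: 0.0 for event_id, _ in events}
    with ThreadPoolExecutor() as pool:
        for partial in pool.map(lambda task: partial_losses(*task), tasks):
            for event_id, loss in partial.items():
                totals[event_id] += loss
    return totals


# Toy data: each event maps locations to a damage ratio; each exposure is
# a (location, insured value) pair.
events = [("E1", {"A": 0.5, "B": 0.25}), ("E2", {"B": 0.125}), ("E3", {"C": 1.0})]
exposures = [("A", 100.0), ("B", 200.0), ("C", 50.0), ("A", 300.0)]

partitioned = run_analysis(events, exposures, event_chunk_size=1, exposure_chunk_size=2)
whole = run_analysis(events, exposures, len(events), len(exposures))
print(partitioned)
```

Because no sub-analysis depends on any other, the partitioned run gives the same losses as the single-task run, and the number of workers can be scaled up or down without changing the result.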

Moving IT infrastructure to the cloud promises some big benefits that promote a more agile business. First there are those benefits that are true of cloud migration in general: you are freed from the IT upgrade cycle – you need not own and maintain the hardware required, nor worry whether your current servers support the latest software version. The data centre will always have the latest version of the software running on up-to-date hardware. Also, as we have seen, the system is in general much more accessible, requiring only that a device has a web browser. And of particular importance in the CAT modelling arena is scalability: the ease with which the amount of computing power available may be dialled up or down to cover peak times or greater user demand is a huge positive.

One of course has to be careful of the usage costs for a cloud platform, but these have to be compared with the capital-heavy costs of maintaining in-house IT infrastructure, and so this needs to be analysed in a total cost of ownership exercise. Nevertheless it would seem to be a question of when, not if, CAT modelling becomes another industry to live its life in the cloud.

CPD: Actuaries Institute Members can claim two CPD points for every hour of reading articles on Actuaries Digital.